According to an article on IBM's official website, NiLFS(2) and exofs are two next-generation Linux file systems: one advances Linux file systems with logs, the other with objects.

NiLFS(2) is one of numerous file systems that incorporate snapshot behavior. Other file systems that include snapshots are ZFS, LFS, and Ext3cow. NiLFS(2) is the second iteration of a log-structured file system developed in Japan by Nippon Telegraph and Telephone (NTT). The file system is under very active development, having recently entered the mainline Linux kernel (in addition to the NetBSD kernel). The first version of NILFS (version 1) appeared in 2005 but lacked any form of garbage collection. In mid-2007, version 2 was first released, which included a garbage collector and the ability to create and maintain multiple snapshots. This year (2009), the NiLFS(2) file system entered the mainline kernel and can be enabled simply by installing its loadable module.

An interesting aspect of NiLFS(2) is its technique of continuous snapshotting. As NILFS is log structured, new data is written to the head of the log while old data still exists (until it’s necessary to garbage-collect it). Because the old data is there, you can step back in time to inspect epochs of the file system. These epochs are called checkpoints in NiLFS(2) and are an integral part of the file system. NiLFS(2) creates these checkpoints as changes are made, but you can also force a checkpoint to occur. As I show further down, checkpoints (recovery points) can be viewed as well as changed into a snapshot. Snapshots can be mounted into the Linux file system space just like other file systems, but currently they are read-only. This is quite useful, as you can mount a snapshot and recover files that were previously deleted or check previous versions of files.
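To make the idea of stepping back through checkpoints concrete, here is a minimal sketch in Python. It is a toy model, not the NiLFS(2) implementation: the class `TinyLog` and its methods are hypothetical names chosen for illustration, and the model assumes one checkpoint per change, which is a simplification.

```python
class TinyLog:
    """Toy append-only log (hypothetical, for illustration only).

    Every change appends a record to the log and records a checkpoint,
    so older states stay readable until something reclaims them.
    """

    def __init__(self):
        self.log = []          # append-only records: (name, data); data None marks a delete
        self.checkpoints = {}  # checkpoint number -> log length at that moment
        self.next_cno = 1

    def _checkpoint(self):
        cno = self.next_cno
        self.checkpoints[cno] = len(self.log)
        self.next_cno += 1
        return cno

    def write(self, name, data):
        # New data always goes to the head of the log; nothing is overwritten.
        self.log.append((name, data))
        return self._checkpoint()

    def delete(self, name):
        # A delete is just another record; the old data is still in the log.
        self.log.append((name, None))
        return self._checkpoint()

    def view(self, cno=None):
        """Replay the log up to a checkpoint (or to the head if cno is None)."""
        end = len(self.log) if cno is None else self.checkpoints[cno]
        files = {}
        for name, data in self.log[:end]:
            if data is None:
                files.pop(name, None)
            else:
                files[name] = data
        return files


fs = TinyLog()
c1 = fs.write("report.txt", "draft")
c2 = fs.write("report.txt", "final")
c3 = fs.delete("report.txt")

print(fs.view())    # current state: the file is gone
print(fs.view(c2))  # state at checkpoint c2: the file is still there
```

Viewing the log at an older checkpoint is what makes it possible to recover a deleted file or an earlier version, as long as the old records have not been garbage-collected.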

In addition to continuous snapshots, NiLFS(2) provides a number of other benefits. One of the most important from an availability perspective is quick restart. If the current checkpoint is invalid, the file system need only step back to the last good checkpoint to reach a valid state. That certainly beats running a full fsck.
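The quick-restart idea can be sketched as a backward scan for the newest checkpoint that still verifies. The article does not describe how NiLFS(2) validates a checkpoint, so the CRC check below is an assumption made purely for illustration, and all function names are hypothetical.

```python
import zlib


def make_checkpoint(cno, payload):
    """Build a toy checkpoint record with a CRC over its payload (assumed scheme)."""
    return {"cno": cno, "payload": payload, "crc": zlib.crc32(payload)}


def is_valid(cp):
    """A checkpoint is considered good if its stored CRC matches its payload."""
    return zlib.crc32(cp["payload"]) == cp["crc"]


def last_good_checkpoint(checkpoints):
    """Walk from newest to oldest and return the first checkpoint that verifies."""
    for cp in reversed(checkpoints):
        if is_valid(cp):
            return cp
    return None


history = [make_checkpoint(n, f"state {n}".encode()) for n in (1, 2, 3)]
history[-1]["crc"] ^= 1  # simulate a checkpoint damaged by a crash

good = last_good_checkpoint(history)
print(good["cno"])
```

The cost of recovery here depends on how many recent checkpoints are damaged, not on the size of the file system, which is the contrast with a full consistency scan.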

Challenges of NiLFS(2)

Although continuous snapshot is a great feature, a cost is associated with it. The upside, as I mentioned, is that it’s log structured, so writes are sequential in nature (minimizing seek behavior of the physical disk) and thus very fast. The downside is that it’s log structured, and garbage collection is needed to clean up old data and metadata. Normally, the file system operates very quickly, but when garbage collection is required, performance slows down.

Exploring NiLFS(2)

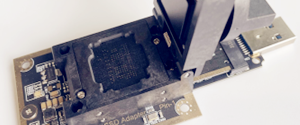

Let’s look at NiLFS(2) in action. This demonstration shows how to create an NiLFS(2) file system in a loop device (a simple method to test file systems), then looks at some of the NiLFS(2) features. Start by installing the NiLFS(2) kernel module:

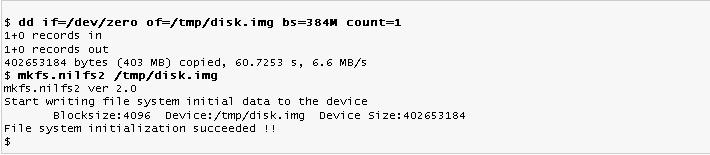

Next, create a file that will contain the file system (an area on the host operating system that you mount as its own file system through the loop device), then build the NiLFS(2) file system within it using mkfs (see Listing 1).

Listing 1. Preparing the NiLFS(2) file system

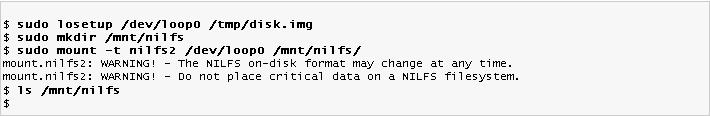

You now have your disk image initialized with the NiLFS(2) file system format. Next, mount the file system onto a mount point using the loop device (see Listing 2). Note that when the file system is mounted, a user-space program called nilfs_cleanerd is also started to provide garbage collection services.

Listing 2. Mounting NiLFS(2) using the loop device

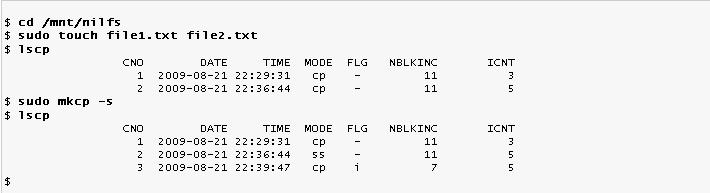

Now, add a few files to the file system, and then use the lscp command to list the current checkpoints available (see Listing 3). You define a snapshot using the mkcp command, and then look at the checkpoints again. At the second lscp, you can see your newly created snapshot (with all checkpoints and snapshots having a CNO, or checkpoint number).

Listing 3. Listing checkpoints and creating a snapshot

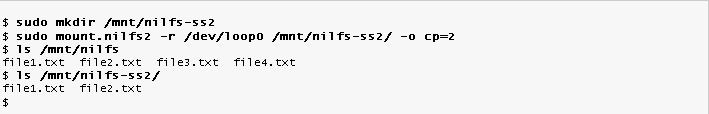

Now that you have a snapshot, add a few more files to your current file system, again with the touch command (see Listing 4).

Listing 4. Adding a few more files to your NiLFS(2) file system

Now, mount your snapshot as a read-only file system. You do this similarly to your previous mount, but in this case, you need to specify the snapshot to mount. You do this with the cp option. Note from your prior lscp that your snapshot was CNO=2. Use this CNO for the mount command to mount the read-only file system. Once mounted, you first ls your mounted read/write file system and see all files. In the read-only snapshot, you see only the two files that existed at the time of the snapshot (see Listing 5).

Listing 5. Mounting the read-only NiLFS(2) snapshot

Note that these snapshots persist once converted from checkpoints. Checkpoints can be reclaimed from the file system when space is needed, but snapshots are persistent.
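The difference between reclaimable checkpoints and persistent snapshots can be sketched as a simple filter. This is a toy policy, not the behavior of nilfs_cleanerd: the function `reclaim` and its `keep_latest` parameter are hypothetical, and the real cleaner's selection rules are not covered in this article.

```python
def reclaim(checkpoints, keep_latest=1):
    """Return the checkpoints that survive a cleanup pass.

    checkpoints maps a checkpoint number (CNO) to True if it has been
    converted to a snapshot, False if it is a plain checkpoint.
    Snapshots always survive; of the plain checkpoints, only the newest
    keep_latest are kept (an assumed policy for illustration).
    """
    plain = sorted(cno for cno, is_snapshot in checkpoints.items() if not is_snapshot)
    protected = set(plain[-keep_latest:]) if keep_latest > 0 else set()
    return {
        cno: is_snapshot
        for cno, is_snapshot in checkpoints.items()
        if is_snapshot or cno in protected
    }


# CNO 2 was turned into a snapshot; the others are plain checkpoints.
state = {1: False, 2: True, 3: False, 4: False}
print(reclaim(state))
```

In this model, checkpoints 1 and 3 are reclaimed, while snapshot 2 and the most recent checkpoint remain.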

This demonstration showed two of the command-line utilities for NiLFS(2): lscp (list checkpoints and snapshots) and mkcp (make checkpoint or snapshot). There’s also a utility called chcp for converting a checkpoint to a snapshot or vice versa, and rmcp to invalidate a checkpoint or snapshot.

Given the temporal nature of the file system, NTT has considered some other very innovative tools for the future—for example, tls (temporal ls), tdiff (temporal diff), and tgrep (temporal grep). Introducing time-based functionality seems to be the logical next step.

The Extended Object File System (exofs)

The Extended Object File System (exofs) is a traditional Linux file system built over an object storage system. Exofs was initially developed by Avishay Traeger of IBM and at that time was called the OSD file system, or osdfs. Panasas (a company that builds object storage systems) has since taken over the project and renamed it exofs (as its ancestry is from the ext2 file system).

A file system over an object storage system

Conceptually, an object storage system can be viewed as a flat namespace of objects and their associated metadata. Compare this to traditional storage systems based on blocks, with metadata occupying some blocks to provide the semantic glue. At a high level, exofs is built as shown in Figure 3. The Virtual File System Switch (VFS) provides the path to exofs, where exofs communicates with the object storage system through a local OSD initiator. The OSD initiator implements the OSD T-10 standard SCSI command set.

Figure 3. High-level view of the exofs/OSD ecosystem

The idea behind exofs is to provide a traditional file system over an OSD backstore. In this way, it’s easier to migrate to object-level storage, because the file system presented is a traditional file system.

File system mapping

Each object in an OSD is represented by a 64-bit identifier in a flat namespace. To overlay the standard POSIX interface onto an object storage system, a mapping is required. Exofs provides a simple mapping that is also scalable and extensible.

Files within a file system are represented uniquely as inodes. Exofs maps inodes to the object identifiers (OIDs) in the object system. From there, objects are used to represent all the elements of the file system. Files are mapped directly to objects, and directories are simply files that reference the files contained within the directory (as file name and inode-OID pairs). This is illustrated in simple form in Figure 4. Other objects exist to support things like inode bitmaps (for inode allocation).
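The mapping described above can be sketched with a flat dictionary standing in for the object store. This is a toy model, not exofs code: `ToyExofs`, `ino_to_oid`, and the `OID_OFFSET` constant are hypothetical names, and the fixed-offset mapping from inode number to OID is an assumption made for illustration.

```python
OID_OFFSET = 0x10000  # illustrative offset; an assumption, not a value from the article


def ino_to_oid(ino):
    """Map an inode number to an object identifier in the flat namespace."""
    return ino + OID_OFFSET


class ToyExofs:
    """Toy file system over a flat object store (hypothetical, for illustration)."""

    def __init__(self):
        self.store = {}             # flat namespace: OID -> object contents
        self.next_ino = 1
        self.root = self._alloc({})  # a directory is an object holding name -> inode pairs

    def _alloc(self, content):
        ino = self.next_ino
        self.next_ino += 1
        self.store[ino_to_oid(ino)] = content
        return ino

    def mkdir(self, dir_ino, name):
        ino = self._alloc({})
        self.store[ino_to_oid(dir_ino)][name] = ino
        return ino

    def create(self, dir_ino, name, data=b""):
        ino = self._alloc(data)      # the file's contents live in their own object
        self.store[ino_to_oid(dir_ino)][name] = ino
        return ino

    def lookup(self, path):
        """Resolve a path by following name -> inode pairs, one directory object at a time."""
        ino = self.root
        for part in path.strip("/").split("/"):
            if part:
                ino = self.store[ino_to_oid(ino)][part]
        return self.store[ino_to_oid(ino)]


fs = ToyExofs()
docs = fs.mkdir(fs.root, "docs")
fs.create(docs, "notes.txt", b"hello")
print(fs.lookup("/docs/notes.txt"))
```

The hierarchy exists only in the directory objects; the store itself stays a flat set of identifiers, which is the property that makes the mapping easy to overlay on an OSD.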

Figure 4. High-level view of OSD representations

The OIDs used to represent objects within the object space are 64 bits in size, thereby supporting a large space of objects.

Why object storage?

Object storage is an interesting idea and makes for a much more scalable system. It removes portions of the file system from the host and pushes them into the storage subsystem. There are trade-offs here, but by distributing portions of the file system to multiple endpoints, you distribute the workload, making the object-based method simpler to scale to much larger storage systems. Rather than the host operating system needing to worry about block-to-file mapping, the storage device itself provides this mapping, allowing the host to operate at the file level.

Object storage systems also provide the ability to query the available metadata. This provides some additional advantages, because the search capability can be distributed to the endpoint object systems.
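As a rough sketch of distributed metadata search, each endpoint can filter its own objects and the host only merges the results. The functions `query_endpoint` and `query_all` and the metadata keys are hypothetical; real object storage systems define their own attribute and query interfaces.

```python
def query_endpoint(objects, **criteria):
    """Runs on one storage endpoint: return OIDs whose metadata matches every criterion."""
    return [
        oid
        for oid, meta in objects.items()
        if all(meta.get(key) == value for key, value in criteria.items())
    ]


def query_all(endpoints, **criteria):
    """Runs on the host: ask each endpoint to search locally, then merge the hits."""
    hits = []
    for objects in endpoints:
        hits.extend(query_endpoint(objects, **criteria))
    return sorted(hits)


# Two endpoints, each holding OID -> metadata for its own objects.
endpoints = [
    {1: {"owner": "alice"}, 2: {"owner": "bob"}},
    {3: {"owner": "alice", "type": "log"}},
]
print(query_all(endpoints, owner="alice"))
```

Because the filtering happens where the metadata lives, the host receives only matching identifiers rather than scanning every object itself.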

Object storage has made a comeback recently in the area of cloud storage. Cloud storage providers (which sell storage as a service) represent their storage as objects instead of the traditional block API. These providers implement APIs for object transfer, management, and metadata management.

What’s ahead?

Although NiLFS(2) and exofs will be interesting and useful additions to the Linux file system inventory, there’s more on the way. We’ve seen Btrfs introduced recently (from Oracle), which offers a Linux alternative to Sun Microsystems’ Zettabyte File System (ZFS). Another recent file system is Ceph, which provides a reliable POSIX-based distributed file system with no single point of failure. Today, we find a new log-structured file system and the introduction of a file system over an object store. Linux continues to prove that it’s the research platform of choice as well as an enterprise-class operating system.

Resource: http://www.ibm.com/developerworks/linux/library/l-nilfs-exofs/index.html